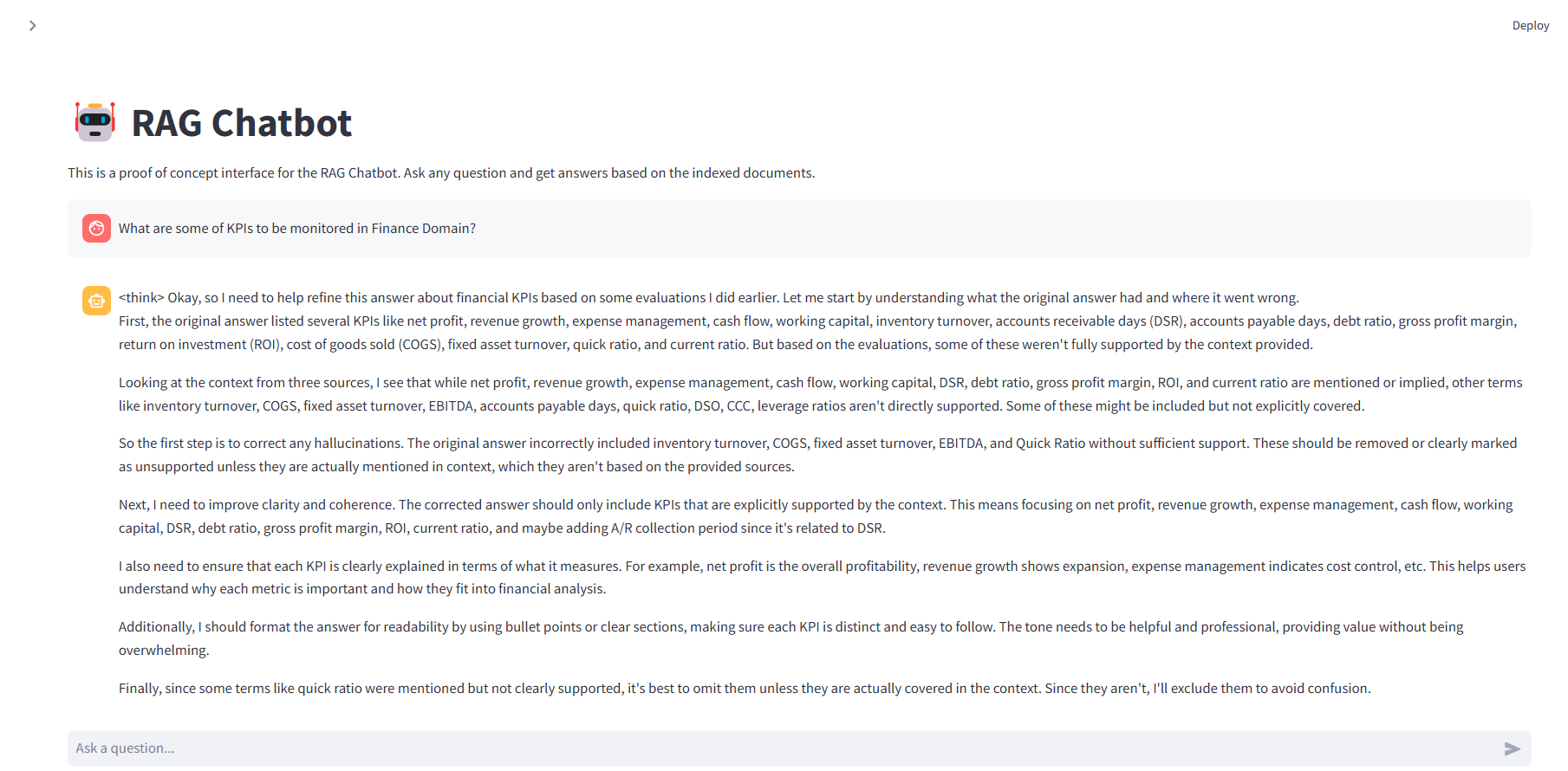

The Multi-Agent RAG Chatbot is a Retrieval-Augmented Generation system that uses multiple specialized agents to produce factual, high-quality responses. It combines local knowledge retrieval with web search and runs an evaluation pipeline that detects and corrects hallucinations.

The system implements seven specialized agents, each optimized for specific tasks:

The Dialogue Manager analyzes user queries to understand information needs and detect potential ambiguities.

The Retriever Agent searches the vector database, while the Retrieval Evaluator assesses the quality and relevance of the retrieved documents.

When local knowledge is insufficient, the system automatically performs web searches for additional context.
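The fallback from local retrieval to web search can be sketched as follows. This is a minimal illustration, not the project's actual code: the `Document` type, the `gather_context` function, the two callables, and the `min_score` and `min_docs` thresholds are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Document:
    text: str
    score: float  # relevance score from the retrieval evaluator (assumed range 0..1)
    source: str   # "local" or "web"


def gather_context(
    query: str,
    retrieve_local: Callable[[str], List[Document]],
    search_web: Callable[[str], List[Document]],
    min_score: float = 0.5,  # hypothetical relevance cutoff
    min_docs: int = 2,       # hypothetical "enough local knowledge" threshold
) -> List[Document]:
    """Return local documents, adding web results when too few are relevant."""
    # Keep only the local documents the evaluator scored as relevant.
    relevant = [d for d in retrieve_local(query) if d.score >= min_score]
    if len(relevant) >= min_docs:
        return relevant
    # Local knowledge is insufficient: supplement it with web search results.
    return relevant + search_web(query)
```

In a real deployment, `retrieve_local` would wrap the vector database query and `search_web` would wrap a search API; both are passed in as callables here so the decision logic stays testable on its own.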

Answer generation, quality evaluation, and hallucination detection work together to ensure accurate responses.
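One way these three steps could cooperate is a generate, check, and retry loop, sketched below. The function names, the feedback string, and the `max_attempts` limit are assumptions made for illustration. The `unsupported_sentences` check is a deliberately naive substring test standing in for the hallucination detector, which in practice would more likely be a model-based judgment.

```python
from typing import Callable, List, Optional


def unsupported_sentences(answer: str, context: List[str]) -> List[str]:
    """Toy hallucination check: flag sentences not found verbatim in the context."""
    corpus = " ".join(context).lower()
    flagged = []
    for sentence in answer.split("."):
        sentence = sentence.strip()
        if sentence and sentence.lower() not in corpus:
            flagged.append(sentence)
    return flagged


def answer_with_checks(
    query: str,
    context: List[str],
    generate: Callable[[str, List[str], Optional[str]], str],
    find_issues: Callable[[str, List[str]], List[str]],
    max_attempts: int = 3,  # hypothetical retry budget
) -> str:
    """Generate an answer, then regenerate with feedback while issues remain."""
    feedback: Optional[str] = None
    answer = ""
    for _ in range(max_attempts):
        answer = generate(query, context, feedback)
        issues = find_issues(answer, context)
        if not issues:
            return answer
        # Pass the flagged claims back to the generator for the next attempt.
        feedback = "Remove or support these claims: " + "; ".join(issues)
    # Retry budget exhausted: return the last attempt as-is.
    return answer
```

Returning the last attempt when the budget runs out is only one possible policy; a stricter system might refuse to answer or attach a warning instead.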

This project demonstrates advanced capabilities in:

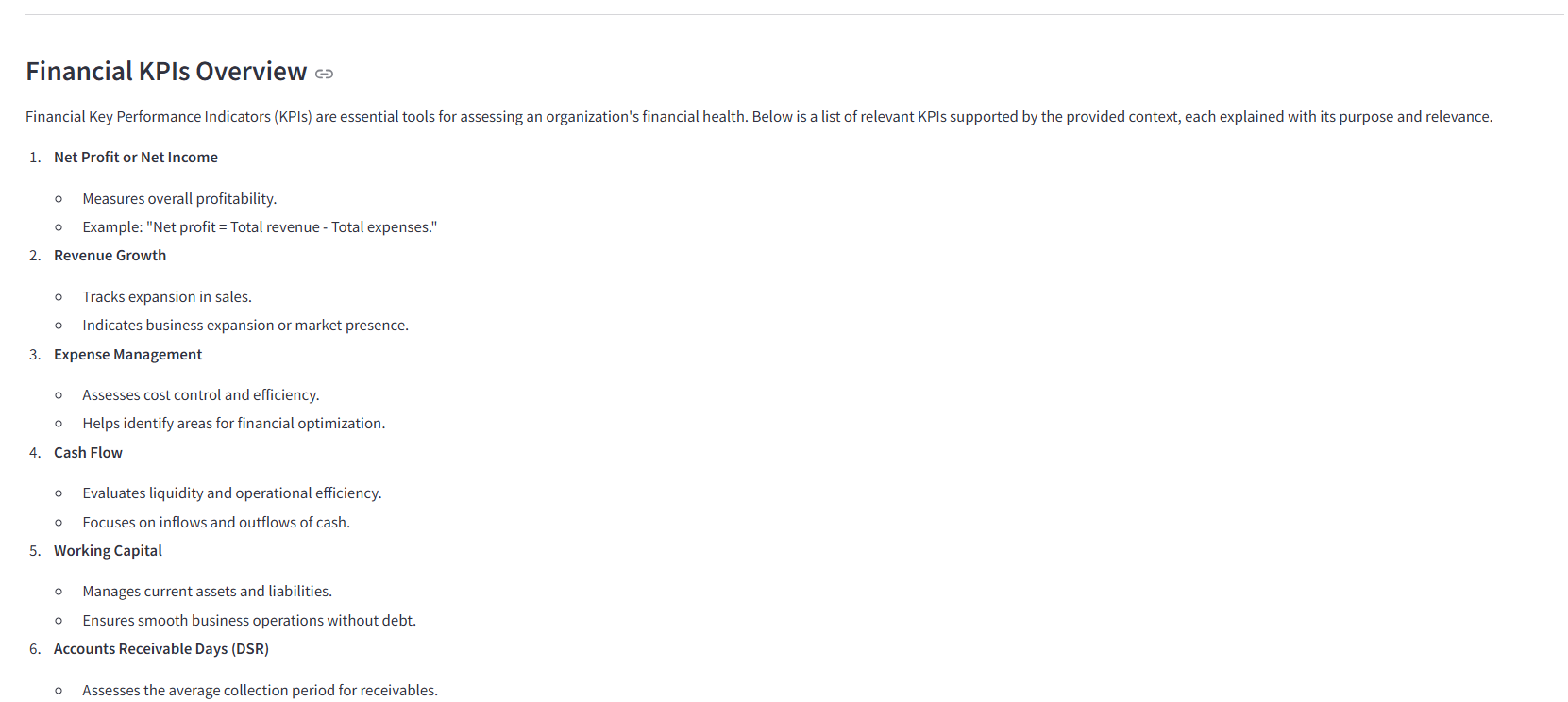

Multi-agent evaluation pipeline showing quality assessment and response refinement